In Kanzi Studio projects, shader programs are stored as files on your computer's hard drive. When you add shaders to the <KanziWorkspace>/Projects/<ProjectName>/Shaders directory of your Kanzi Studio project, Kanzi Studio automatically shows them in Library > Resource Files > Shaders.

Direct access to the shader files is useful, for example, when you want to switch all the stock material types from fragment-based to vertex-based shaders, or the other way around. If the file names are the same, you can do this by replacing the shader files with the correct versions in the file system.

The fastest way to create your own shading is to find a similar use case in the Kanzi material library and modify its shader and material properties, or to use the shaders of the VertexPhong or VertexPhongTextured material types as a template.

You can use the Kanzi Studio Shader Source Editor to edit the shader source code. When you work with the Shader Source Editor, keep in mind that:

- The inputs of shader programs are vertex attributes and uniforms. Attributes can vary per vertex and are provided to vertex shaders. Uniforms can be used as input to both vertex and fragment shaders.
- The vertex buffer of a mesh also contains a set of attributes. These vertex buffer attributes are used to send data to vertex shaders.
- Kanzi can pass attributes to shader programs automatically, but you can also configure these settings manually.

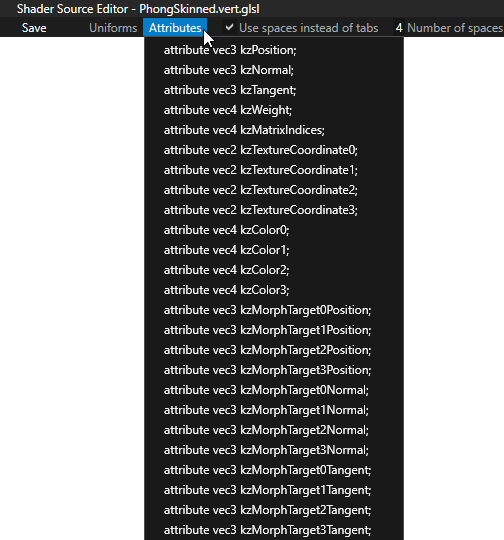

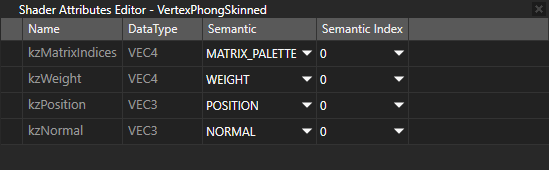

Vertex attributes always have one of these semantics, and Kanzi automatically recognizes these names:

| Attribute | Data type | Description |
|---|---|---|
| kzPosition | vec3 | position |
| kzNormal | vec3 | normal |
| kzTangent | vec3 | tangent |
| kzWeight | vec4 | weight |
| kzMatrixIndices | vec4 | matrix palette |
| kzTextureCoordinate0 | vec2 | texture coordinate 0 |
| kzTextureCoordinate1 | vec2 | texture coordinate 1 |
| kzTextureCoordinate2 | vec2 | texture coordinate 2 |
| kzTextureCoordinate3 | vec2 | texture coordinate 3 |
| kzColor0 | vec4 | color 0 |
| kzColor1 | vec4 | color 1 |
| kzColor2 | vec4 | color 2 |
| kzColor3 | vec4 | color 3 |
| kzMorphTarget0Position | vec3 | morph target position 0 |
| kzMorphTarget1Position | vec3 | morph target position 1 |
| kzMorphTarget2Position | vec3 | morph target position 2 |
| kzMorphTarget3Position | vec3 | morph target position 3 |
| kzMorphTarget0Normal | vec3 | morph target normal 0 |
| kzMorphTarget1Normal | vec3 | morph target normal 1 |
| kzMorphTarget2Normal | vec3 | morph target normal 2 |
| kzMorphTarget3Normal | vec3 | morph target normal 3 |
| kzMorphTarget0Tangent | vec3 | morph target tangent 0 |
| kzMorphTarget1Tangent | vec3 | morph target tangent 1 |
| kzMorphTarget2Tangent | vec3 | morph target tangent 2 |
| kzMorphTarget3Tangent | vec3 | morph target tangent 3 |

You can also add custom attributes.

If the shader attributes and the vertex attributes correspond one to one, Kanzi maps them to each other automatically. If your mesh data is organized differently, you can configure the mappings manually. You must use manual mappings if your shader uses attribute names that are not in the default list.
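The automatic mapping rule can be pictured as a name lookup. The following C++ sketch is a hypothetical illustration, not the Kanzi API: the names defaultAttributeSemantics and automaticSemantic are invented, and the table holds only a subset of the default names listed above. It also assumes that attribute names, like uniform names, are matched case-sensitively. A name found in the table resolves to its semantic; any other name returns no semantic, which corresponds to the case where you must create a manual mapping.

```cpp
#include <cassert>
#include <map>
#include <optional>
#include <string>

// Hypothetical lookup table: a subset of the default vertex attribute names and their
// semantics from the table above. This is an illustration, not part of the Kanzi API.
const std::map<std::string, std::string>& defaultAttributeSemantics()
{
    static const std::map<std::string, std::string> table = {
        {"kzPosition", "position"},
        {"kzNormal", "normal"},
        {"kzTangent", "tangent"},
        {"kzWeight", "weight"},
        {"kzMatrixIndices", "matrix palette"},
        {"kzTextureCoordinate0", "texture coordinate 0"},
        {"kzColor0", "color 0"},
    };
    return table;
}

// Returns the semantic that a shader attribute name maps to automatically.
// std::nullopt means the name is not in the default list (the lookup is case-sensitive),
// so the attribute needs a manual mapping.
std::optional<std::string> automaticSemantic(const std::string& shaderAttributeName)
{
    const auto& table = defaultAttributeSemantics();
    const auto it = table.find(shaderAttributeName);
    if (it == table.end())
    {
        return std::nullopt;
    }
    return it->second;
}
```

For example, automaticSemantic("kzNormal") yields "normal", while a custom name such as "myCustomAttribute" yields no value.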

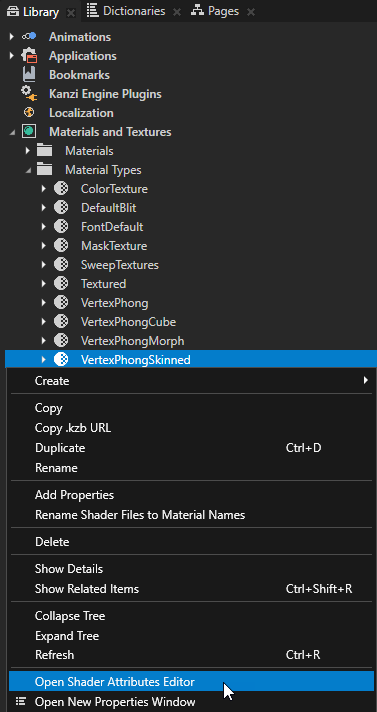

To view and edit the semantics of the shader attributes of a material type, in the Library > Materials and Textures > Material Types, right-click a material type and select Open Shader Attributes Editor.

Shader uniforms can receive their data from these data sources:

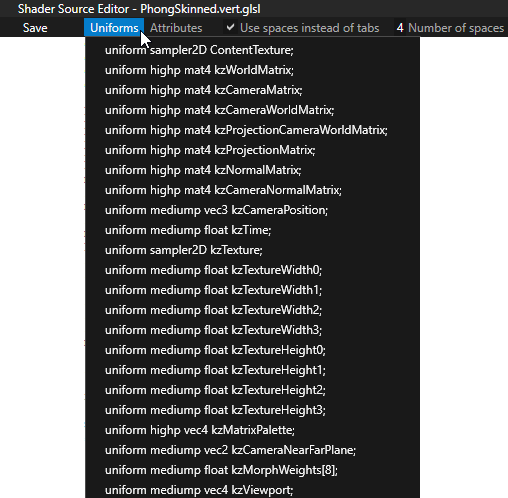

If the name of a uniform matches one of the Kanzi default uniforms, Kanzi Engine automatically sends its value to the shader program. These uniforms are available by default:

| Uniform | Data type | Description |
|---|---|---|
| ContentTexture | sampler2D | A texture provided by the rendered node when rendering, for example, the image displayed in an Image node. |
| kzWorldMatrix | mat4 | A transformation matrix that transforms from local coordinates to world (global) coordinates. |
| kzCameraMatrix | mat4 | A matrix that transforms from world (global) coordinates to view (camera) coordinates, that is, applies the camera transformation. |
| kzCameraWorldMatrix | mat4 | Pre-multiplied matrix: kzCameraMatrix * kzWorldMatrix |
| kzProjectionCameraWorldMatrix | mat4 | Pre-multiplied matrix: kzProjectionMatrix * kzCameraMatrix * kzWorldMatrix |
| kzProjectionMatrix | mat4 | A matrix that projects view coordinates into screen coordinates. |
| kzNormalMatrix | mat4 | A matrix that transforms object normals to world coordinates. |
| kzCameraNormalMatrix | mat4 | Pre-multiplied matrix: kzCameraMatrix * kzNormalMatrix |
| kzCameraPosition | vec3 | Camera location in world coordinates. |
| kzTime | float | Debug timer, where 1.0f = 1000 milliseconds. |
| kzTexture | sampler2D | A texture to apply to a brush or material. |
| kzTextureWidth0 | float | Width of the texture bound in unit 0 |
| kzTextureWidth1 | float | Width of the texture bound in unit 1 |
| kzTextureWidth2 | float | Width of the texture bound in unit 2 |
| kzTextureWidth3 | float | Width of the texture bound in unit 3 |
| kzTextureHeight0 | float | Height of the texture bound in unit 0 |
| kzTextureHeight1 | float | Height of the texture bound in unit 1 |
| kzTextureHeight2 | float | Height of the texture bound in unit 2 |
| kzTextureHeight3 | float | Height of the texture bound in unit 3 |
| kzMatrixPalette | vec4 | Array of 4x3 matrices used in vertex skinning. Each matrix has the form [m00 m01 m02 translate_x] [m10 m11 m12 translate_y] [m20 m21 m22 translate_z]. |
| kzCameraNearFarPlane | vec2 | Near and far plane distances from the camera. |
| kzMorphWeights[8] | float | Array of mesh weights used in morphing. |
| kzViewport | vec4 | A vector of the form (x, y, width, height) that transforms from normalized device coordinates to screen (window) coordinates. Sets the viewport position and size. |
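The pre-multiplied matrices and kzViewport together describe how a vertex reaches the screen. The following C++ sketch mirrors that chain on the CPU: it builds the same product as kzProjectionCameraWorldMatrix, transforms a local position into clip space, divides by w to get normalized device coordinates, and maps them to window coordinates with the standard OpenGL viewport transform. The helper names and the row-major matrix layout are assumptions made for illustration; this is not Kanzi Engine code.

```cpp
#include <array>
#include <cassert>

using Vec4 = std::array<float, 4>;
// Row-major 4x4 matrix, m[row][column]. GLSL stores matrices column-major,
// but the result of the product M * v is the same.
using Mat4 = std::array<std::array<float, 4>, 4>;

Mat4 identity()
{
    Mat4 m{};
    for (int i = 0; i < 4; ++i)
        m[i][i] = 1.0f;
    return m;
}

// Translation matrix in this row-major layout (translation in the last column).
Mat4 translation(float x, float y, float z)
{
    Mat4 m = identity();
    m[0][3] = x;
    m[1][3] = y;
    m[2][3] = z;
    return m;
}

Mat4 multiply(const Mat4& a, const Mat4& b)
{
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i][j] += a[i][k] * b[k][j];
    return r;
}

// Matrix * column vector, as in GLSL "m * v".
Vec4 transform(const Mat4& m, const Vec4& v)
{
    Vec4 r{};
    for (int i = 0; i < 4; ++i)
        for (int k = 0; k < 4; ++k)
            r[i] += m[i][k] * v[k];
    return r;
}

// Same product as kzProjectionCameraWorldMatrix:
// kzProjectionMatrix * kzCameraMatrix * kzWorldMatrix.
Mat4 projectionCameraWorld(const Mat4& projection, const Mat4& camera, const Mat4& world)
{
    return multiply(multiply(projection, camera), world);
}

struct WindowPoint
{
    float x;
    float y;
};

// Standard OpenGL viewport mapping, assuming kzViewport = (x, y, width, height):
// the NDC range [-1, 1] maps to [x, x + width] and [y, y + height].
WindowPoint ndcToWindow(float ndcX, float ndcY, const Vec4& viewport)
{
    return {viewport[0] + (ndcX + 1.0f) * 0.5f * viewport[2],
            viewport[1] + (ndcY + 1.0f) * 0.5f * viewport[3]};
}

// Clip space (the value written to gl_Position) -> NDC (divide by w) -> window.
WindowPoint clipToWindow(const Vec4& clip, const Vec4& viewport)
{
    return ndcToWindow(clip[0] / clip[3], clip[1] / clip[3], viewport);
}
```

Using one pre-multiplied matrix in the shader saves two matrix multiplications per vertex, which is why the sample shader later in this section writes gl_Position with kzProjectionCameraWorldMatrix directly.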

If the name of a uniform matches one of the property types defined in the material type of the shader, the value is supplied from that property. At runtime, Kanzi collects the property values from the rendered material and from the lights that match the light properties defined in the material type, taking any property overrides into account.

The names and data types of the uniforms must match the names and data types of the property types in the material type. Note that the display name of a property type can differ from its actual name, which is the name used here. See Property types. For example, if the material type has a color property type named Diffuse, the shader code must contain this definition:

```glsl
uniform mediump vec4 Diffuse;
```

The names must match letter for letter, including case. This table lists the shader uniform data types that are compatible with each property data type:

| Property data type | Shader uniform data type |
|---|---|
| Float | float |
| Vector 2D | vec2 |
| Vector 3D | vec3 |
| Color | vec4 |
| Vector 4D | vec4 |
| Matrix 2D | mat2 |
| Matrix 3D | mat3 |
| Matrix 4D | mat4 |
| Texture | sampler1D, sampler2D, sampler3D, sampler1DShadow, sampler2DShadow, samplerCube |
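The matching rules above (an exact, case-sensitive name plus a compatible data type) can be expressed as a small check. This C++ sketch is a hypothetical illustration, not part of the Kanzi API: compatibleUniformTypes and uniformMatchesProperty are invented names, and the property name passed in is the actual name of the property type, not its display name.

```cpp
#include <cassert>
#include <map>
#include <set>
#include <string>

// Compatible shader uniform types for each property data type, copied from the table above.
// Hypothetical helper for illustration; not a Kanzi API.
const std::map<std::string, std::set<std::string>>& compatibleUniformTypes()
{
    static const std::map<std::string, std::set<std::string>> table = {
        {"Float", {"float"}},
        {"Vector 2D", {"vec2"}},
        {"Vector 3D", {"vec3"}},
        {"Color", {"vec4"}},
        {"Vector 4D", {"vec4"}},
        {"Matrix 2D", {"mat2"}},
        {"Matrix 3D", {"mat3"}},
        {"Matrix 4D", {"mat4"}},
        {"Texture", {"sampler1D", "sampler2D", "sampler3D",
                     "sampler1DShadow", "sampler2DShadow", "samplerCube"}},
    };
    return table;
}

// True only if the names are identical (std::string comparison is case-sensitive)
// and the uniform type is listed as compatible with the property data type.
bool uniformMatchesProperty(const std::string& propertyName, const std::string& propertyDataType,
                            const std::string& uniformName, const std::string& uniformType)
{
    if (propertyName != uniformName)
    {
        return false;
    }
    const auto& table = compatibleUniformTypes();
    const auto it = table.find(propertyDataType);
    return it != table.end() && it->second.count(uniformType) > 0;
}
```

With the Diffuse example, a Color property named Diffuse matches "uniform mediump vec4 Diffuse;", but not a uniform named diffuse or a Diffuse uniform declared as vec3.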

Light property types support uniform arrays, which lets you use multiple lights of the same type. For example, if a shader program uses two directional lights, declare the light uniforms in the shader of that material type as arrays:

```glsl
uniform mediump vec4 DirectionalLightColor[2];
uniform mediump vec3 DirectionalLightDirection[2];
```
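To show how the array elements line up, the following C++ sketch evaluates on the CPU the Lambert diffuse term that a shader would compute by looping over DirectionalLightColor[i] and DirectionalLightDirection[i]. It assumes that each direction points from the light toward the scene, so the vector toward the light is the negated direction (the point light sample shader in this section negates its light-to-vertex vector in the same way), and it uses only the RGB part of the colors. The function names are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>

using Vec3 = std::array<float, 3>;

float dot(const Vec3& a, const Vec3& b)
{
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

Vec3 normalize(const Vec3& v)
{
    const float length = std::sqrt(dot(v, v));
    return Vec3{v[0] / length, v[1] / length, v[2] / length};
}

// CPU-side sketch of the diffuse term for N directional lights stored in parallel arrays,
// like DirectionalLightColor[N] and DirectionalLightDirection[N] in the shader.
// Assumption: each direction points from the light toward the scene.
template <std::size_t N>
Vec3 diffuseFromDirectionalLights(const Vec3& normal, const Vec3& diffuse,
                                  const std::array<Vec3, N>& lightColor,
                                  const std::array<Vec3, N>& lightDirection)
{
    const Vec3 n = normalize(normal);
    Vec3 result{0.0f, 0.0f, 0.0f};
    for (std::size_t i = 0; i < N; ++i)
    {
        // Element i of both arrays describes the same light.
        const Vec3 toLight = normalize(Vec3{-lightDirection[i][0], -lightDirection[i][1], -lightDirection[i][2]});
        const float lDotN = std::fmax(0.0f, dot(toLight, n));
        for (std::size_t c = 0; c < 3; ++c)
        {
            result[c] += lDotN * diffuse[c] * lightColor[i][c];
        }
    }
    return result;
}
```

Because the array size is a template parameter here, adding a third light only means passing arrays of three elements, which mirrors changing [2] to [3] in the shader declarations.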

These property types support uniform arrays:

This is an example of a Phong vertex shader used in the Gestures example. See Gestures example.

```glsl
attribute vec3 kzPosition;
attribute vec3 kzNormal;

// Kanzi default uniforms.
uniform highp mat4 kzProjectionCameraWorldMatrix;
uniform highp mat4 kzWorldMatrix;
uniform highp mat4 kzNormalMatrix;
uniform highp vec3 kzCameraPosition;

// Property types of the material type. The light uniforms are arrays with one element per light.
uniform mediump vec3 PointLightPosition[2];
uniform mediump vec4 PointLightColor[2];
uniform mediump vec3 PointLightAttenuation[2];
uniform mediump vec4 Ambient;
uniform mediump vec4 Diffuse;
uniform mediump vec4 SpecularColor;
uniform mediump float SpecularExponent;

// Per-vertex lighting results passed to the fragment shader.
varying lowp vec3 vAmbDif;
varying lowp vec3 vSpec;

void main()
{
    precision highp float;

    vec3 pointLightDirection[2];

    // Vertex position in world coordinates and the view vector from the camera to the vertex.
    vec4 positionWorld = kzWorldMatrix * vec4(kzPosition.xyz, 1.0);
    vec3 V = normalize(positionWorld.xyz - kzCameraPosition);

    // Normal in world coordinates. w = 0.0 so that a translation in the matrix, if any,
    // does not affect the normal.
    vec4 Norm = kzNormalMatrix * vec4(kzNormal, 0.0);
    vec3 N = normalize(Norm.xyz);

    // Vectors from each light to the vertex.
    pointLightDirection[0] = positionWorld.xyz - PointLightPosition[0];
    pointLightDirection[1] = positionWorld.xyz - PointLightPosition[1];

    vec3 L[2];
    vec3 H[2];
    float LdotN, NdotH;
    float specular;
    vec3 c;
    float d, attenuation;

    vAmbDif = vec3(0.0);
    vSpec = vec3(0.0);

    // L: direction from the vertex to the light.
    // H: half vector between L and the direction from the vertex to the camera.
    L[0] = normalize(-pointLightDirection[0]);
    H[0] = normalize(-V + L[0]);
    L[1] = normalize(-pointLightDirection[1]);
    H[1] = normalize(-V + L[1]);

    vAmbDif += Ambient.rgb;

    // Apply point light 0.
    {
        LdotN = max(0.0, dot(L[0], N));
        NdotH = max(0.0, dot(N, H[0]));
        specular = pow(NdotH, SpecularExponent);
        // Distance attenuation: c.x + c.y * d + c.z * d * d, clamped to avoid division by zero.
        c = PointLightAttenuation[0];
        d = length(pointLightDirection[0]);
        attenuation = 1.0 / max(0.001, (c.x + c.y * d + c.z * d * d));
        vAmbDif += (LdotN * Diffuse.rgb) * attenuation * PointLightColor[0].rgb;
        vSpec += SpecularColor.rgb * specular * attenuation * PointLightColor[0].rgb;
    }

    // Apply point light 1.
    {
        LdotN = max(0.0, dot(L[1], N));
        NdotH = max(0.0, dot(N, H[1]));
        specular = pow(NdotH, SpecularExponent);
        c = PointLightAttenuation[1];
        d = length(pointLightDirection[1]);
        attenuation = 1.0 / max(0.001, (c.x + c.y * d + c.z * d * d));
        vAmbDif += (LdotN * Diffuse.rgb) * attenuation * PointLightColor[1].rgb;
        vSpec += SpecularColor.rgb * specular * attenuation * PointLightColor[1].rgb;
    }

    // Clip-space position from the pre-multiplied projection, camera, and world matrix.
    gl_Position = kzProjectionCameraWorldMatrix * vec4(kzPosition.xyz, 1.0);
}
```
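To check which attenuation values a given PointLightAttenuation setting produces, the following C++ sketch evaluates the same expression as the shader on the CPU. The function name and the reading of c.x, c.y, and c.z as constant, linear, and quadratic coefficients follow from the shape of the formula; they are assumptions for illustration, not names taken from Kanzi.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Mirrors the shader line:
//   attenuation = 1.0 / max(0.001, c.x + c.y * d + c.z * d * d)
// where c = PointLightAttenuation[i] and d is the distance from the light to the vertex.
// The 0.001 floor keeps the division finite when all coefficients are zero.
float pointLightAttenuation(float constant, float linear, float quadratic, float distance)
{
    const float denominator = constant + linear * distance + quadratic * distance * distance;
    return 1.0f / std::max(0.001f, denominator);
}
```

For example, coefficients (1, 0, 0) give no falloff, while (0, 0, 1) give inverse-square falloff, so a vertex 2 units from the light receives a quarter of its intensity.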

For details, see the ShaderProgram class in the API reference.